Image credit: Unsplash

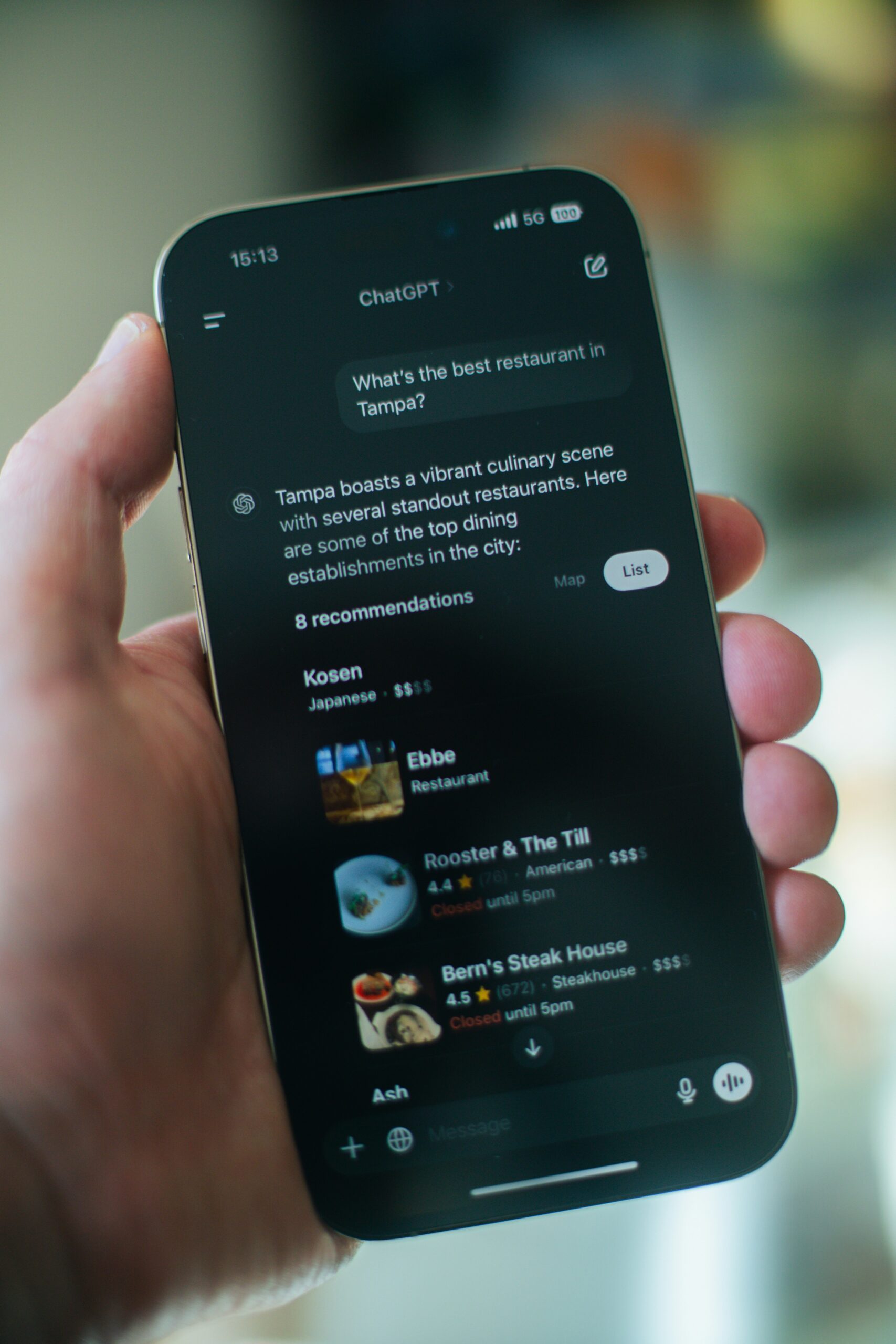

Many have noted that generative AI tends to make things up, show bias, and sometimes spit out toxic phrases. Because of this, it’s no wonder some question its safety. WitnessAI CEO Rick Caccia argues that it can be used safely, provided the right controls are in place.

“Securing AI models is a real problem, and it’s one that’s especially shiny for AI researchers, but it’s different from securing use,” Caccia, who formerly served as the SVP of marketing at Palo Alto Networks, commented recently. He continued, “I think of it like a sports car: having a more powerful engine — i.e., model — doesn’t buy you anything unless you have good brakes and steering, too. The controls are just as important for fast driving as the engine.”

Such controls are certainly in demand among enterprises, which have legitimate concerns about the technology’s limitations. Still, they remain relatively optimistic about the productivity-boosting potential of generative AI.

According to an IBM poll, 51% of CEOs are hiring for generative AI-related roles that did not exist until this year. However, only 9% of businesses say they are ready to manage the threats that come with the technology, such as those related to privacy, intellectual property, and other digital risks.

Caccia is aware of these issues. The WitnessAI platform intercepts activity between employees and the custom generative AI models their employer uses, and applies risk-mitigating policies and safeguards. The models in question are not those gated behind an API, like OpenAI’s GPT-4, but something more akin to Meta’s Llama 3.

“One of the promises of enterprise AI is that it unlocks and democratizes enterprise data to the employees so that they can do their jobs better, but unlocking all that sensitive data too well, or having it leak or get stolen, is a problem,” Caccia said.

WitnessAI offers access to multiple modules, each focused on addressing a different form of risk. One module allows organizations to apply rules that prevent particular staffers from using AI-powered tools in ways they should not, while another redacts proprietary and sensitive information from prompts and implements safeguards against attacks on the models.

According to Caccia, “We think the best way to help enterprises is to define the problem in a way that makes sense — for example, safe adoption of AI — and then sell a solution that addresses the problem.” He added, “The CISO wants to protect the business, and WitnessAI helps them do that by ensuring data protection, preventing prompt injection, and enforcing identity-based policies. The chief privacy officer wants to ensure that existing — and incoming — regulations are followed, and we give them visibility and a way to report on activity and risk.”

Nevertheless, because all data passes through the platform before reaching a model, WitnessAI raises privacy questions of its own. The company is transparent about this, and even offers tools to monitor which models employees access, the questions they ask of those models, and the responses they receive.

“We’ve built a millisecond-latency platform with regulatory separation built right in — a unique, isolated design to protect enterprise AI activity in a way that is fundamentally different from the usual multi-tenant software-as-a-service services,” Caccia stated. “We create a separate instance of our platform for each customer, encrypted with their keys. Their AI activity data is isolated to them — we can’t see it.”

Caccia’s words may alleviate some customers’ fears, but many workers remain concerned about the potential for surveillance. Surveys show that most people do not like having their workplace activity monitored, regardless of the reason, and believe it hurts company morale.

However, Caccia says there is still strong interest in the WitnessAI platform, and the company has raised $27.5 million from Ballistic Ventures and GV, Google’s corporate venture arm. WitnessAI’s 18-person team is expected to expand to at least 40 by the end of 2024.

“We’ve built our plan to get well into 2026 even if we had no sales at all, but we’ve already got almost 20 times the pipeline needed to hit our sales targets this year,” Caccia said. He concluded, “This is our initial funding round and public launch, but secure AI enablement and use is a new area, and all of our features are developing with this new market.”